What is Recursion?

Recursion is a powerful and elegant technique that forms the backbone of many algorithms. It allows a function to call itself repeatedly until a specific condition is met, enabling the solution of complex problems by breaking them down into simpler subproblems.

Anatomy of a Recursive function

Every recursive function consists of two essential parts: the base condition and the recursive call.

Base Condition: The base condition is the foundation of a recursive function. It defines the scenario where the function can provide a direct solution without the need for further recursive calls, acting as a termination point to prevent infinite loops. The base condition represents the simplest form of the problem that can be solved immediately.

Recursive Call: The recursive call is where the function invokes itself with a modified version of the original problem. The function approaches the base condition by gradually reducing the problem into smaller, more manageable subproblems. The results of these recursive calls are then combined or processed to yield the final solution.

The cooperation between the base condition and the recursive call is what makes recursion a powerful tool. The base condition ensures that the recursion terminates, while the recursive call allows the function to tackle complex problems by breaking them down into simpler ones.

Here’s the general structure of a recursive function:

function recursiveFunction(parameters) {

if (baseCondition) {

// Base case: Return a direct solution

return baseResult;

} else {

// Recursive case: Modify parameters for the next recursive call

const modifiedParameters = ...;

// Invoke the recursive function with modified parameters

const recursiveResult = recursiveFunction(modifiedParameters);

// Process or combine the recursive result

const finalResult = ...;

return finalResult;

}

}Types of Recursion

Recursion can be classified into different types based on how the recursive calls are made. Let’s explore each type with examples, use cases, pros, and cons.

Direct Recursion

In direct recursion, a function calls itself directly within its own body.

Example:

function factorial(n) {

if (n === 0) {

return 1;

}

return n * factorial(n - 1);

}

console.log(factorial(5)); // Output: 120Use cases:

- Calculating factorials

- Traversing tree-like data structures (e.g., binary trees, file systems)

- Implementing recursive algorithms (e.g., Tower of Hanoi, Fibonacci sequence)

Pros:

- Simple and intuitive to understand and implement

- Suitable for problems with a clear recursive structure

Cons:

- Can lead to stack overflow if the recursion depth is too large

- May be less efficient compared to iterative solutions for certain problems

Indirect Recursion

Indirect recursion occurs when a function calls another function, which in turn calls the original function directly or indirectly.

Example:

function isEven(n) {

if (n === 0) {

return true;

}

return isOdd(n - 1);

}

function isOdd(n) {

if (n === 0) {

return false;

}

return isEven(n - 1);

}

console.log(isEven(4)); // Output: true

console.log(isOdd(5)); // Output: trueUse cases:

- Implementing mutually recursive functions (e.g., even/odd, is_palindrome)

- Solving problems that can be divided into interrelated subproblems

Pros:

- Allows for modular and organized code structure due to each function having a specific role.

- Can make the logic more readable and maintainable due to each function handling a distinct part of the problem.

Cons:

- Even the modularity and separation of concerns makes it readable at a glance, it can be still harder to understand since it involves multiple functions calling each other. This can make the flow of execution less straightforward compared to direct recursion, where you only deal with a single function calling itself.

- It can result in deeper recursion depths since multiple functions are involved in the recursive process. This increases the risk of stack overflow if the recursion goes too deep, as each function call adds a new frame to the call stack.

Tail Recursion

A recursive function is considered tail recursive if the recursive call is the last thing executed by the function. There is no need to keep a record of the previous state – in other words, there’s no additional computation after the recursive call returns.

Example:

function sumNumbers(n, accumulator = 0) {

if (n === 0) {

return accumulator;

}

return sumNumbers(n - 1, accumulator + n);

}

console.log(sumNumbers(5)); // Output: 15Use cases:

-

Optimizing recursive algorithms by simplifying the recursive structure to reduce the amount of recursive calls

- Example: A tail-recursive version of the naive implementation of Fibonacci sequence (more details on Optimizing Recursive functions section).

- Tail call optimization (TCO) can also further enhance performance by optimizing these calls at the engine level. In terms of Javascript, TCO is only supported by the Safari engine.

- Avoiding stack overflow in languages (or engines) that support tail call optimization

Pros:

- Can be optimized by the compiler or interpreter for better performance

- Reduces the risk of stack overflow in supported languages / engines

Cons:

- Not all programming languages support tail call optimization in the engine level

- May require additional parameters or helper functions to maintain the state

Non-Tail Recursion

A recursive function is considered non-tail recursive if the recursive call is not the last thing executed by the function. After returning from the recursive call, there is something left to evaluate (notice the console log after the reversePrint function below).

Example:

function reversePrint(n) {

if (n === 0) {

return;

}

reversePrint(n - 1);

console.log(n);

}

reversePrint(5);

// Output:

// 1

// 2

// 3

// 4

// 5Use cases:

- Generating permutations or combinations

- Traversing and processing data structures in a post-order manner

Pros:

- Allows for post-processing or evaluation after the recursive call

- Suitable for problems that require backtracking or post-order traversal

Cons:

- Can lead to stack overflow if the recursion depth is too large

- May be less efficient compared to tail recursive or iterative solutions

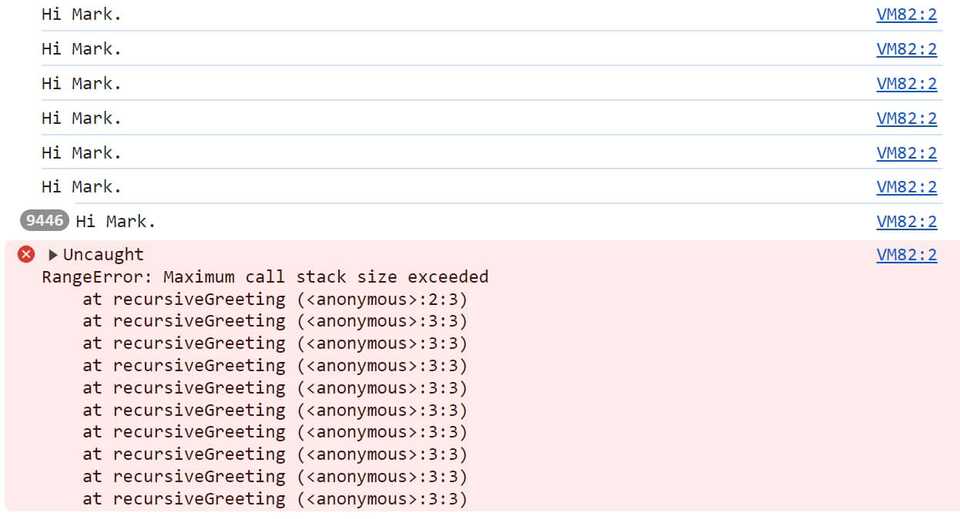

Infinite Recursion and Stack Overflow Errors

It is critical that the recursive calls eventually reach the base condition. Without a well-defined base condition, the function may continue calling itself indefinitely, leading to infinite recursion and a stack overflow error.

Consider the following example of a recursive function without a base condition:

function recursiveGreeting(name) {

console.log(`Hi ${name}.`);

recursiveGreeting(name);

}

recursiveGreeting("Mark");In this case, the recursiveGreeting function lacks a base condition. When invoked with the argument "Mark", it will print "Hi Mark." and then call itself with the same argument. This process will repeat indefinitely, causing an infinite loop. The function will keep printing "Hi Mark." until the call stack reaches its limit, resulting in a stack overflow error:

However, stack overflow errors can also occur in functions with a base condition if the input data is too large. For example, calculating the factorial of a very large number using a simple recursive function without optimization can also lead to a stack overflow due to the excessive depth of recursive calls.

Which brings us to the next topic: to effectively work with recursive functions, it’s not only essential to understand the anatomy of recursion but also to comprehend how the call stack operates. The call stack is an “unseen” key element that must be considered when working with recursive problems.

Understanding the Call Stack

The call stack is a fundamental data structure used by Javascript Engine (similarly in many other programming languages) to manage function execution, evaluations, and the program’s execution flow “under the hood”.

When a script calls a function, Javascript creates a new execution context for that function and pushes it onto the call stack. This execution context includes information such as the function’s parameters, local variables, and the location to return to when the function completes. If the function makes further function calls or evaluations, additional execution contexts are pushed onto the stack.

The call stack operates on the principle of “last in, first out” (LIFO), meaning that the last execution context pushed onto the stack is the first one to be popped off when it completes. Javascript continuously executes the code in the execution context at the top of the stack. When a function completes, its execution context is popped off the stack, and the program resumes execution from the previous execution context.

Let’s consider a simple example to illustrate how the call stack behaves with non-nested function calls:

Recorded gif from JS Visualizer 9000 tool: https://www.jsv9000.app/

Code:

function one() {

return 'one';

}

function two() {

return 'two';

}

function three() {

return 'three';

}

one();

two();

three();Here’s what happens with the call stack:

-

one()is called:oneis pushed onto the call stack.oneexecutes and returns the string'one'.oneis popped off the call stack.

-

two()is called:twois pushed onto the call stack.twoexecutes and returns the string'two'.twois popped off the call stack.

-

three()is called:threeis pushed onto the call stack.threeexecutes and returns the string'three'.threeis popped off the call stack.

In this scenario, each function call is independent and completes before the next function is called. The call stack never holds more than one function at a time, as each function “comes and goes” in a sequential manner.

Now, let’s examine how the call stack handles nested function calls:

Recorded gif from JS Visualizer 9000 tool: https://www.jsv9000.app/

Code:

function one() {

return two();

}

function two() {

return three();

}

function three() {

return 'done!';

}

one();Here’s what happens with the call stack when we’re dealing with nested calls:

-

one()is called:oneis pushed onto the call stack.onecallstwo().

-

two()is called byone():twois pushed onto the call stack on top ofone.twocallsthree().

-

three()is called bytwo():threeis pushed onto the call stack on top oftwo.threeexecutes and returns the string'done!'.threeis popped off the call stack.

-

The return value from

three()is used bytwo():twocontinues execution, receives the string'done!', and returns it.twois popped off the call stack.

-

The return value from

two()is used byone():onecontinues execution, receives the string'done!', and returns it.oneis popped off the call stack.

In this case, the call stack holds multiple functions simultaneously due to the nested calls to manage more complex execution flows.

The Call Stack and Recursion:

Recursion relies heavily on the call stack to manage the multiple instances of the recursive function. Each recursive call creates a new frame on the call stack with its own execution context (variables and parameters).

Consider a simple example of a recursive function that counts down from a number:

Recorded gif from JS Visualizer 9000 tool: https://www.jsv9000.app/

Code:

function recursiveCountdown(n) {

if (n === 0) {

return;

}

return recursiveCountdown(n - 1);

}

recursiveCountdown(4);Here’s what happens in the call stack when we’re dealing with this recursive function:

recursiveCountdown(4)is called and pushed onto the stack.- Since

nis not 0, it callsrecursiveCountdown(3)and pushes it onto the stack. - This process continues, pushing

recursiveCountdown(2),recursiveCountdown(1), andrecursiveCountdown(0)onto the stack. - When

recursiveCountdown(0)is called, the base case is met, and it returnsundefinedand popsrecursiveCountdown(0)off the stack. - The return value (

undefined) is passed up torecursiveCountdown(1), which then returns and pops off the stack. - This process continues until

recursiveCountdown(4)receives the return value fromrecursiveCountdown(3)and completes, ultimately clearing the stack.

As you see, even a small operation can fill up the call stack pretty quickly. Therefore it’s critical to be cautious of the call stack’s size when working with recursion. If the recursion depth becomes too large or if there is no base case to terminate the recursion, the call stack can overflow. This happens when the maximum stack size is exceeded, and the program runs out of memory to store additional execution contexts.

Optimizing Recursive functions

Recursive functions can be powerful and expressive, but they can also suffer from performance issues if not optimized properly. One common problem that arises with recursive functions is redundant calculations, where the same subproblems are solved multiple times. This can lead to exponential time complexity and inefficient use of resources.

Whenever we discuss optimizing an algorithm, we are essentially focusing on improving its time and space complexity. This section assumes that you are at least somewhat familiar with Big O notation. If you need a quick refresher, I recommend starting with the article below and then returning here to continue:

Comprehensive Big O Notation Guide in Plain English, using Javascript

Case study: Fibonacci Sequence Problem

To illustrate this, let’s take a look at the famous Fibonacci sequence problem and explore how we can optimize its recursive solution. The Fibonacci sequence is a series of numbers where each number is the sum of the two preceding ones. The sequence starts with 0 and 1, and goes on infinitely:

0, 1, 1, 2, 3, 5, 8, 13, 21, 34, …

Naive Recursive Implementation

A straightforward recursive implementation of the Fibonacci sequence can be written as follows:

function fibonacci(n) {

if (n <= 1) {

return n;

}

return fibonacci(n - 1) + fibonacci(n - 2);

}In this implementation, the base condition is when n is less than or equal to 1, in which case we simply return n. For any value of n greater than 1, we recursively call the fibonacci function with n - 1 and n - 2 and add their results to obtain the Fibonacci number at position n.

While this implementation is concise and easy to understand, it suffers from a major performance issue. Let’s analyze the time complexity of this approach.

Time Complexity: O(2^n)

The time complexity of the naive recursive Fibonacci implementation can be represented by the recurrence relation:

T(n) = T(n-1) + T(n-2) + O(1)This means that to calculate the Fibonacci number at position n, we need to calculate the Fibonacci numbers at positions n-1 and n-2, and then perform a constant-time operation (addition).

The recursive calls form a binary tree-like structure, where each node represents a function call. The height of this tree is n, and the number of nodes in the tree grows exponentially with n. In fact, the time complexity of this naive recursive approach is exponential, approximately O(2^n). In simpler words, each function is calling 2 functions, those 2 functions are calling 4 functions, and so on...

To understand why, let’s look at a small example. Consider the calculation of the 5th Fibonacci number:

fibonacci(5)

-> fibonacci(4) + fibonacci(3)

-> (fibonacci(3) + fibonacci(2)) + (fibonacci(2) + fibonacci(1))

-> ((fibonacci(2) + fibonacci(1)) + (fibonacci(1) + fibonacci(0))) +

((fibonacci(1) + fibonacci(0)) + fibonacci(1))

-> (((fibonacci(1) + fibonacci(0)) + fibonacci(1)) + (1 + 0)) +

((1 + 0) + 1)As we can see, the number of function calls grows exponentially with each increasing value of n. Each function call leads to two more function calls, resulting in a binary tree structure. The number of nodes in this tree is approximately 2^n, leading to an exponential time complexity.

Space Complexity: O(n)

The space complexity of the naive recursive Fibonacci implementation is O(n). This is because the maximum depth of the recursive call stack is proportional to the input value n. Each recursive call adds a new frame to the call stack, consuming additional memory.

In the worst case, when n is large, the recursive calls will reach a depth of n before starting to return. This means that the call stack will contain n frames, each storing the local variables and function call information.

It’s important to note that the space complexity is not exponential like the time complexity. While the time complexity grows exponentially with n, the space complexity grows linearly with n due to the linear growth of the call stack.

To summarize:

- The time complexity of the naive recursive Fibonacci implementation is O(2^n), which is exponential.

- The space complexity is O(n) due to the linear growth of the call stack with respect to the input value

n.

Now let’s explore different optimization techniques to improve the performance of the recursive Fibonacci function:

1 - Memoization

Memoization is a technique where we store the results of expensive function calls and return the cached result when the same inputs occur again. This optimization can significantly improve the performance of recursive functions by eliminating redundant calculations.

Here’s an example of applying memoization to the Fibonacci function:

function fibonacciMemo(n, memo = {}) {

if (n in memo) {

return memo[n];

}

if (n <= 1) {

return n;

}

memo[n] = fibonacciMemo(n - 1, memo) + fibonacciMemo(n - 2, memo);

return memo[n];

}In this optimized version, we introduce an object memo to store the previously calculated Fibonacci numbers. Before making a recursive call, we check if the result for the current input n is already available in the memo object. If it is, we return the memoized result directly. Otherwise, we proceed with the recursive calls and store the result in the memo object before returning it.

Time Complexity: O(n)

With memoization, each Fibonacci number is calculated only once. The recursive calls are made only for numbers that have not been previously calculated and memoized. This reduces the time complexity from exponential to linear, as there are only n unique subproblems to solve.

Space Complexity: O(n)

The space complexity of the memoized solution is O(n) because the memo object stores the results of each subproblem. In the worst case, the memo object will contain all the Fibonacci numbers from 0 to n.

Use cases:

- When the recursive function performs redundant calculations

- When the recursive function has overlapping subproblems

Pros:

- Eliminates redundant calculations

- Significantly improves the time complexity (from exponential to linear)

Cons:

- Requires extra space to store the memoized results

- May not be suitable for all recursive problems

2 - Tail Call Optimization

Tail call optimization is a technique used by some programming languages / engines to optimize recursive functions. It allows the compiler or interpreter to reuse the same callstack frame for recursive calls, thereby avoiding the overhead of creating new stack frames.

To take advantage of tail call optimization, we need to rewrite the recursive function in a way that the recursive call is the last operation performed. Here’s an example of the Fibonacci function optimized for tail calls:

function fibonacciTailCall(n, a = 0, b = 1) {

if (n === 0) {

return a;

}

if (n === 1) {

return b;

}

return fibonacciTailCall(n - 1, b, a + b);

}In this version, we introduce two additional parameters a and b to keep track of the previous two Fibonacci numbers. The recursive call is made with n - 1, and the values of a and b are updated accordingly. The base conditions handle the cases when n is 0 or 1.

Time Complexity: O(n)

The tail-recursive solution has a time complexity of O(n) because it makes n recursive calls, each taking constant time. This time complexity remains the same regardless of whether tail call optimization is supported or not.

Space Complexity: O(n) or O(1)

The space complexity of the tail-recursive solution depends on whether the Javascript engine supports tail call optimization or not.

- If tail call optimization is supported, the space complexity is reduced to O(1) because the recursive calls are optimized to reuse the same stack frame. This eliminates the need for additional memory to store the call stack.

- If tail call optimization is not supported, the space complexity remains O(n) because each recursive call will consume an additional stack frame, similar to the naive recursive approach.

It’s worth noting that tail call optimization is not consistently available across all Javascript engines. As of today, TCO is only supported by Safari (in strict mode). This means that the actual space complexity of the tail-recursive solution may vary depending on the execution environment.

Use cases:

- When the recursive function can be transformed into a tail-recursive form

- When the programming language or specific implementation supports tail call optimization

Pros:

- Avoids stack overflow errors for deep recursions (if tail call optimization is supported)

- Optimizes memory usage by reusing stack frames (if tail call optimization is supported)

Cons:

- Not all Javascript engines support tail call optimization consistently

- May require additional parameters and manipulation of the recursive function

To keep the consistent behavior across different Javascript environments, it’s generally recommended to rely on other optimization techniques that have more predictable performance characteristics.

3 - Trampolining

Trampolining is a technique used to overcome the limitations of stack overflow in recursive functions. It involves separating the recursive function into a trampoline function that handles the recursive calls iteratively. This technique is particularly useful in Javascript, as it provides a way to manage deep recursion across different engines to prevent the call stack to exceed its limit.

Here’s an example of applying trampolining to the Fibonacci function:

function trampoline(fn) {

return function(...args) {

let result = fn(...args);

while (typeof result === 'function') {

result = result();

}

return result;

};

}

function fibonacciTrampoline(n, a = 0, b = 1) {

if (n === 0) {

return a;

}

if (n === 1) {

return b;

}

return () => fibonacciTrampoline(n - 1, b, a + b);

}

const trampolinedFibonacci = trampoline(fibonacciTrampoline);

trampolinedFibonacci(5); // Output: 5In this variant, the trampoline function is defined separately. It takes a recursive function fn as an argument and returns a new function that handles the recursive calls iteratively. The fibonacciTrampoline function remains the same as before, returning a function that encapsulates the next recursive step.

The trampoline function repeatedly calls the returned function until a non-function value is returned, which represents the final result. Finally, we create a trampolinedFibonacci function by passing fibonacciTrampoline to the trampoline function.

Time Complexity: O(n)

The trampolined solution has a time complexity of O(n) because it performs n iterations in the trampoline function. Each iteration invokes the returned function, which represents the next step of the recursion.

Space Complexity: O(n)

The space complexity of the trampolined solution is O(n) because the closure returned by fibonacciTrampoline captures the current state of the computation. In the worst case, there will be n closures created, each capturing the values of n, a, and b.

Use cases:

- When the recursive function has a deep recursion depth

- When the programming language / engine does not support tail call optimization

Pros:

- Avoids stack overflow errors by breaking down the recursion into smaller steps

- Allows for deep recursions without exceeding the call stack limit

Cons:

- Requires additional code to handle the trampolining logic

- May have slightly slower performance compared to direct recursion

Take a look at the visual comparison below to see how trampolining effectively manages the call stack compared to the naive recursive approach:

Call stack visualization using the naive approach:

Recorded gif from JS Visualizer 9000 tool: https://www.jsv9000.app/

Call stack visualization using the trampoline optimization:

Recorded gif from JS Visualizer 9000 tool: https://www.jsv9000.app/

4 - Opting for an Iterative Approach

While recursive solutions can be elegant and expressive, sometimes an iterative approach can be more efficient in terms of time and space complexity. However, it’s important to note that iterative variants of recursive functions can often be more complex and harder to understand compared to their recursive counterparts. Let’s consider an iterative variant of the Fibonacci problem:

function fibonacciIterative(n) {

if (n <= 1) {

return n;

}

let a = 0;

let b = 1;

for (let i = 2; i <= n; i++) {

const temp = b;

b = a + b;

a = temp;

}

return b;

}In this iterative solution, we start with the base cases for n <= 1. Then, we initialize two variables a and b to keep track of the previous two Fibonacci numbers. We iterate from 2 to n, updating the values of a and b in each iteration. Finally, we return the value of b, which represents the Fibonacci number at position n.

Time Complexity: O(n)

The iterative solution has a time complexity of O(n) because it iterates from 2 to n, performing constant-time operations in each iteration. The number of iterations is directly proportional to the input value n.

Space Complexity: O(1)

The space complexity of the iterative solution is O(1) because it only uses a constant amount of additional memory to store the variables a, b, and temp. The space required does not depend on the input value n.

Use cases:

- When the recursive solution exceeds the maximum call stack size

- When the problem requires a large number of iterations

Pros:

- Often more efficient in terms of time and space complexity

- Avoids the overhead of function calls and stack management

Cons:

- Can be more complex and harder to understand compared to recursive solutions

- Requires explicit state management (e.g., variables to track previous values)

- May lack the elegance and expressiveness of recursive solutions

Optimization summary

Just by looking at the time and space complexity of each optimization technique, we can see how they improve upon the naive recursive approach:

- Memoization reduces the time complexity from O(2^n) exponential to O(n) linear, but space complexity stays at O(n) linear to store the memoized results.

- Tail call optimization maintains the O(n) linear time complexity, if the language / engine supports TPO it reduces the space complexity to O(1) constant by reusing stack frames, else space complexity stays at O(n) linear.

- Trampolining also maintains the O(n) linear time complexity but has a space complexity of O(n) linear due to the creation of closures.

- The iterative approach achieves optimization on both ends - O(n) linear time complexity and O(1) constant space complexity, making it the most efficient in terms of both time and space. But at this point, we no longer have a recursive function.

While the iterative solution is the most efficient among them, it comes at the cost of reduced readability and increased complexity.

Recursive solutions, on the other hand, often have a more natural and intuitive structure that aligns with the problem’s definition. They can be easier to understand and reason about, especially for problems that have a clear recursive nature.

When deciding between a recursive or iterative approach, it’s also important to consider not only the efficiency aspects but also the readability and maintainability of the code. In some cases, a recursive solution may be preferable due to its simplicity and expressiveness, even if it has slightly higher time or space complexity compared to an iterative approach.

The choice between recursion and iteration depends on the specific problem, the constraints of the system, and the balance between efficiency and code clarity. It’s all about comparing the trade-offs and choose the approach that best fits the given scenario while prioritizing code readability and maintainability.

I hope this article helped you to understand what a recursion is, how to work with it and how to optimize recursive functions depending on your use case. Thanks for reading!